An attempt at computing dFSCI for English language

In a recent post, I was challenged to offer examples of computation of dFSCI for a list of 4 objects for which I had inferred design.

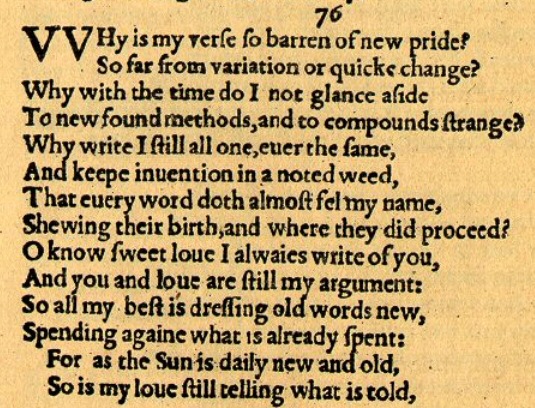

One of the objects was a Shakespeare sonnet.

My answer was the following:

A Shakespeare sonnet. Alan’s comments about that are out of order. I don’t infer design because I know of Shakespeare, or because I am fascinated by the poetry (although I am). I infer design simply because this is a piece of language with perfect meaning in English (OK, ancient English).

Now, a Shakespeare sonnet is about 600 characters long. That corresponds to a search space of about 3000 bits. Now, I cannot really compute the target space for language, but I am assuming here that the number of 600-character sequences which make good sense in English is lower than 2^2500, and therefore the functional complexity of a Shakespeare sonnet is higher than 500 bits, Dembski’s UPB. As I am aware of no simple algorithm which can generate English sonnets from single characters, I infer design. I am certain that this is not a false positive.

In the discussion, I admitted however that I had not really computed the target space in this case:

The only point is that I do not have a simple way to measure the target space for English language, so I have taken a shortcut by choosing a long enough sequence, so that I am well sure that the target space / search space ratio corresponds to more than 500 bits, as I have clearly explained in my post #400.

For proteins, I have methods to approximate a lower threshold for the target space. For language I have never tried, because it is not my field, but I am sure it can be done. We need a linguist (Piotr, where are you?).

That’s why I have chosen an over-generous length. Am I wrong? Well, just offer a false positive.

For language, it is easy to show that the functional complexity is bound to increase with the length of the sequence. That is IMO true also for proteins, but it is less intuitive.

That remains true. But I have reflected, and I thought that perhaps, even if I am not a linguist and not even a mathematician, I could try to define the target space more quantitatively in this case, or at least to find a reasonable higher threshold (an upper bound) for it.

So, here is the result of my reasoning. Again, I am neither a linguist nor a mathematician, and I will be happy to consider any comment, criticism or suggestion. If I have made errors in my computations, I am ready to apologize.

Let’s start from my functional definition: any text of 600 characters which has good meaning in English.

The search space for a random search where every character has the same probability, assuming an alphabet of 30 characters (letters, space, elementary punctuation), is 30^600, that is about 2^2944. IOWs, 2944 bits.
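The figure above can be checked in a couple of lines. This is only a minimal sketch: the function name `search_space_bits` is made up for illustration, and the alphabet size of 30 and the length of 600 are simply the assumptions stated in the text.

```python
import math

def search_space_bits(length: int, alphabet_size: int) -> float:
    """log2 of the number of strings of `length` symbols over `alphabet_size` symbols."""
    # alphabet_size ** length strings in total, so the log2 is length * log2(alphabet_size).
    return length * math.log2(alphabet_size)

# Assumptions from the text: 600 characters, 30-symbol alphabet.
print(round(search_space_bits(600, 30)))  # 2944
```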

OK.

Now, I make the following assumptions (more or less derived from a quick Internet search):

a) There are about 200,000 words in English

b) The average length of an English word is 5 characters.

I also make the easy assumption that a text which has good meaning in English is made of English words.

For a 600 character text, we can therefore assume an average number of words of 120 (600/5).

Now, we compute the possible combinations (with repetition) of 120 words from a pool of 200000. The result, if I am right, is: 2^1453. IOWs, 1453 bits.

Now, obviously each combination of n words can have n! permutations, therefore each of them has 120! different permutations, that is 2^660. IOWs, 660 bits.

So, multiplying the total number of word combinations with repetitions by the total number of permutations for each combination, we have:

2^1453 * 2^660 = 2^2113

IOWs, 2113 bits.
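For anyone who wants to check the arithmetic, here is a minimal Python sketch of the two steps above, using only the standard library. The names `POOL` and `WORDS` are made up for illustration; the values are the assumptions stated in the text. One caveat worth stating: a combination that contains repeated words has fewer than 120! distinct orderings, so the product slightly overcounts. The direct count of ordered sequences is 200000^120, and the sketch compares the two.

```python
import math

POOL = 200_000   # assumed number of English words (from the text)
WORDS = 120      # assumed number of words in a 600-character text (from the text)

# Combinations with repetition of WORDS items from POOL: C(POOL + WORDS - 1, WORDS).
multisets = math.comb(POOL + WORDS - 1, WORDS)
# Permutations of one combination, treating all 120 words as distinct.
orderings = math.factorial(WORDS)

bits_multisets = math.log2(multisets)
bits_orderings = math.log2(orderings)
bits_product = bits_multisets + bits_orderings

# Direct count of ordered sequences of WORDS words from POOL: POOL ** WORDS.
bits_sequences = WORDS * math.log2(POOL)

print(round(bits_multisets), round(bits_orderings), round(bits_product))  # 1453 660 2113
print(round(bits_sequences))  # 2113
```

The two routes differ by well under a tenth of a bit, so the overcount does not change the rounded result.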

What is this number? It is the total number of sequences of 120 words that we can derive from a pool of 200000 English words. Or at least, a good approximation of that number.

It’s a big number.

Now, the important concept: in that number are certainly included all the sequences of 600 characters which have good meaning in English. Indeed, it is difficult to imagine sequences that have good meaning in English and are not made of correct English words.

And the important question: how many of those sequences have good meaning in English? I have no idea. But anyone will agree that it must be only a small subset.

So, I believe that we can say that 2^2113 is a higher threshold for our target space of sequences of 600 characters which have a good meaning in English. And, certainly, a very generous higher threshold.

Well, if we take that number as a measure of our target space, what is the functional information in a sequence of 600 characters which has good meaning in English?

It’s easy: the ratio between target space and search space:

2^2113 / 2^2944 = 2^-831. IOWs, taking -log2, 831 bits of functional information. (Thank you to drc466 for the kind correction here)
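The same subtraction, written out as a small sketch. The helper name `functional_information_bits` and the constant `UPB_BITS` are hypothetical labels introduced here; the inputs are the rounded values from the text, and 500 bits is the UPB threshold mentioned in the original comment.

```python
def functional_information_bits(target_bits: float, search_bits: float) -> float:
    """-log2(target space / search space), with both sizes given as log2 values."""
    # -log2(2**target_bits / 2**search_bits) simplifies to search_bits - target_bits.
    return search_bits - target_bits

UPB_BITS = 500  # threshold used in the original comment

fi = functional_information_bits(target_bits=2113, search_bits=2944)
print(fi, fi > UPB_BITS)  # 831 True
```

Because the target space used here is an overestimate, the result is best read as a lower bound on the functional information under the stated assumptions.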

So, if we consider as a measure of our functional space a number which is certainly an extremely overestimated higher threshold for the real value, still our dFSI is over 800 bits.

Let’s go back to my initial statement:

Now, a Shakespeare sonnet is about 600 characters long. That corresponds to a search space of about 3000 bits. Now, I cannot really compute the target space for language, but I am assuming here that the number of 600-character sequences which make good sense in English is lower than 2^2500, and therefore the functional complexity of a Shakespeare sonnet is higher than 500 bits, Dembski’s UPB. As I am aware of no simple algorithm which can generate English sonnets from single characters, I infer design. I am certain that this is not a false positive.

Was I wrong? You decide.

By the way, another important result is that if I make the same computation for a 300 character string, the dFSI value is about 416 bits. That is a very clear demonstration that, in language, dFSI is bound to increase with the length of the string.
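The dependence on length can be sketched as follows. This is only an illustration under the same assumptions as above (30-character alphabet, 200000 words, 5 characters per word); the function name `dfsi_lower_bound_bits` is made up, and for simplicity it counts ordered word sequences directly as 200000^(n/5), which is the quantity that the combinations-times-permutations product approximates.

```python
import math

def dfsi_lower_bound_bits(n_chars, alphabet=30, vocab=200_000, avg_word_len=5):
    """Search-space bits minus the generous target-space bound, for a text of n_chars."""
    search_bits = n_chars * math.log2(alphabet)
    # Upper bound on the target space: every ordered sequence of n_chars / avg_word_len words.
    target_bits = (n_chars / avg_word_len) * math.log2(vocab)
    return search_bits - target_bits

for n in (150, 300, 600, 1200):
    print(n, round(dfsi_lower_bound_bits(n), 1))
```

Without rounding the intermediate values, the 300-character figure comes out at roughly 415.5 bits, which agrees with the 416 quoted above to within rounding. Under these assumptions the bound grows linearly, at a little under 1.4 bits per character.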